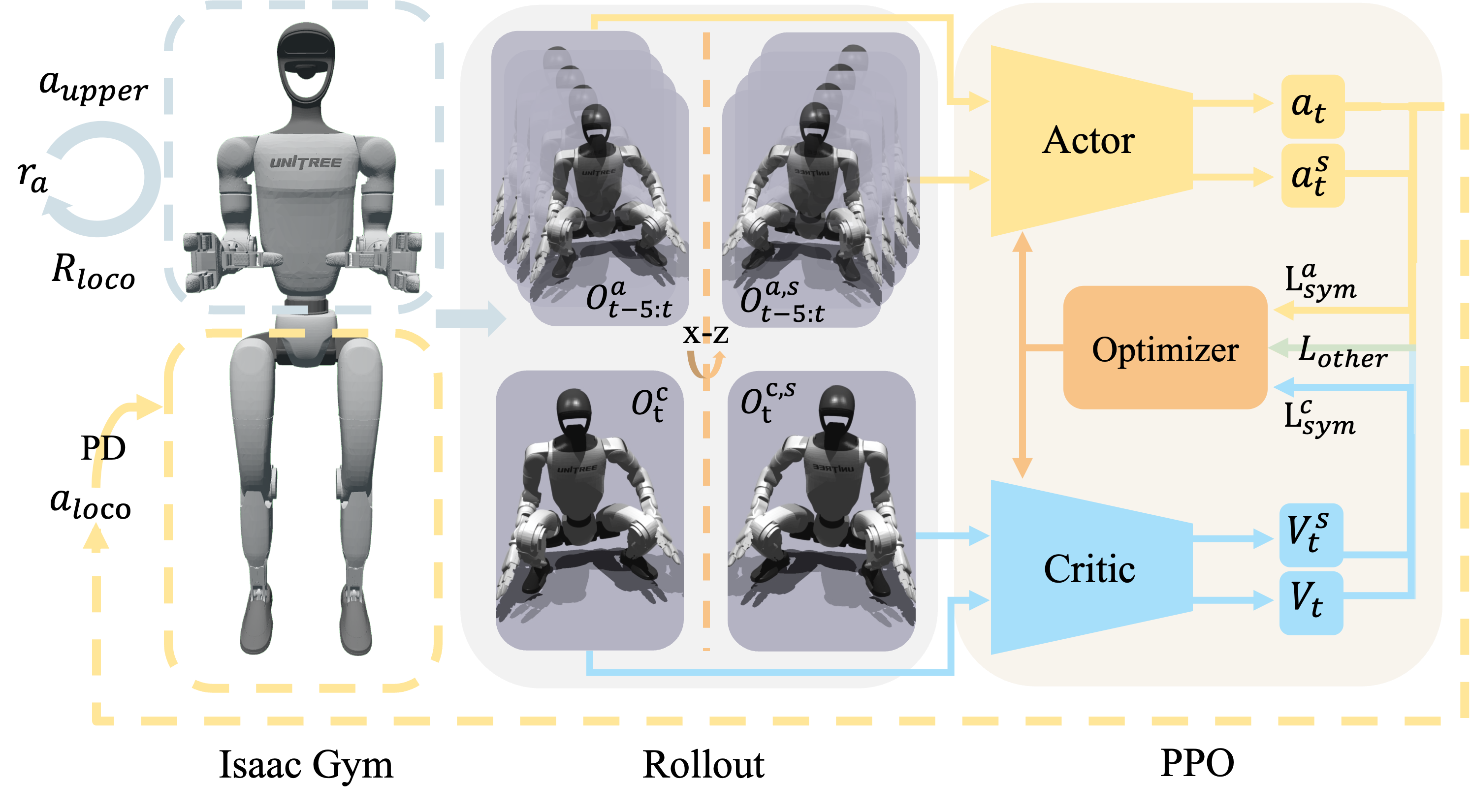

We introduce three core techniques into our RL-based training framework that significantly expand the operational workspace of humanoid robots while ensuring robust locomotion:

- Upper-body pose curriculum: enables the robot to keep its balance under continuously changing upper-body poses.

- Height tracking reward: enables the robot to squat to any required height robustly and quickly.

- Symmetry utilization: makes the robot act more symmetrically and improves data efficiency.
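To make the curriculum idea concrete, here is a minimal Python sketch under one assumed design: upper-body joint targets are sampled uniformly between the default pose and the joint limits, with the range scaled by a ratio that grows whenever a success metric (hypothetical here, e.g. the fraction of episodes without a fall) exceeds a threshold. The class `UpperBodyPoseCurriculum` and its parameters `step` and `threshold` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


class UpperBodyPoseCurriculum:
    """Hypothetical curriculum that widens the range of sampled upper-body poses."""

    def __init__(self, default_pose, joint_low, joint_high,
                 step=0.05, threshold=0.8, seed=0):
        self.default_pose = np.asarray(default_pose, dtype=float)
        self.joint_low = np.asarray(joint_low, dtype=float)
        self.joint_high = np.asarray(joint_high, dtype=float)
        self.step = step            # assumed increment of the range ratio
        self.threshold = threshold  # assumed success rate needed to advance
        self.ratio = 0.0            # 0 = default pose only, 1 = full joint range
        self.rng = np.random.default_rng(seed)

    def sample_targets(self, num_envs):
        """Sample one upper-body target pose per parallel environment."""
        # Shrink the joint limits toward the default pose by the current ratio.
        low = self.default_pose + self.ratio * (self.joint_low - self.default_pose)
        high = self.default_pose + self.ratio * (self.joint_high - self.default_pose)
        return self.rng.uniform(low, high, size=(num_envs, self.default_pose.size))

    def update(self, success_rate):
        """Advance the curriculum only when the policy performs well enough."""
        if success_rate >= self.threshold:
            self.ratio = min(1.0, self.ratio + self.step)
        return self.ratio


# Usage with a toy two-joint upper body.
curriculum = UpperBodyPoseCurriculum(
    default_pose=[0.0, 0.0], joint_low=[-1.0, -0.5], joint_high=[1.0, 0.5])
initial_targets = curriculum.sample_targets(4)  # all equal to the default pose
curriculum.update(success_rate=0.9)             # ratio grows to 0.05
later_targets = curriculum.sample_targets(4)    # at most 5% of the way to the limits
```

Growing the range only after the policy succeeds keeps early training close to a static upper body, so the legs can learn to balance before facing large pose disturbances.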
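The exact form of the height tracking reward is not given here, so the sketch below uses a generic Gaussian-kernel tracking term as a stand-in; it is not the authors' novel reward. The function name `height_tracking_reward`, the width `sigma`, and the toy heights are hypothetical.

```python
import numpy as np


def height_tracking_reward(base_height, target_height, sigma=0.05):
    """Generic Gaussian-kernel height tracking term (a stand-in, not the paper's reward).

    Returns 1.0 when the base height matches the commanded height and decays
    smoothly as the error grows; `sigma` (meters) sets how fast it decays.
    """
    error = np.asarray(base_height, dtype=float) - np.asarray(target_height, dtype=float)
    return np.exp(-np.square(error / sigma))


# Usage: one reward per parallel environment (toy values, in meters).
heights = np.array([0.75, 0.70, 0.45])  # measured base heights
targets = np.array([0.75, 0.75, 0.50])  # commanded squat heights
rewards = height_tracking_reward(heights, targets)
```

A smaller `sigma` rewards only near-exact tracking, while a larger one gives a useful gradient from farther away; how the authors' reward handles this trade-off is not specified here.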

Our framework is entirely MoCap-free (it needs no motion-capture data), which makes the training pipeline more efficient.

Our framework can be used to train different kinds of robots, such as the Unitree G1 and the Fourier GR-1.

Unitree G1 trained in Isaac Gym.

Fourier GR-1 trained in Isaac Gym.

After only about 3 hours of training with our framework on an NVIDIA RTX 4090, we obtain policies that can be deployed directly in the real world to make the robots walk and squat robustly.

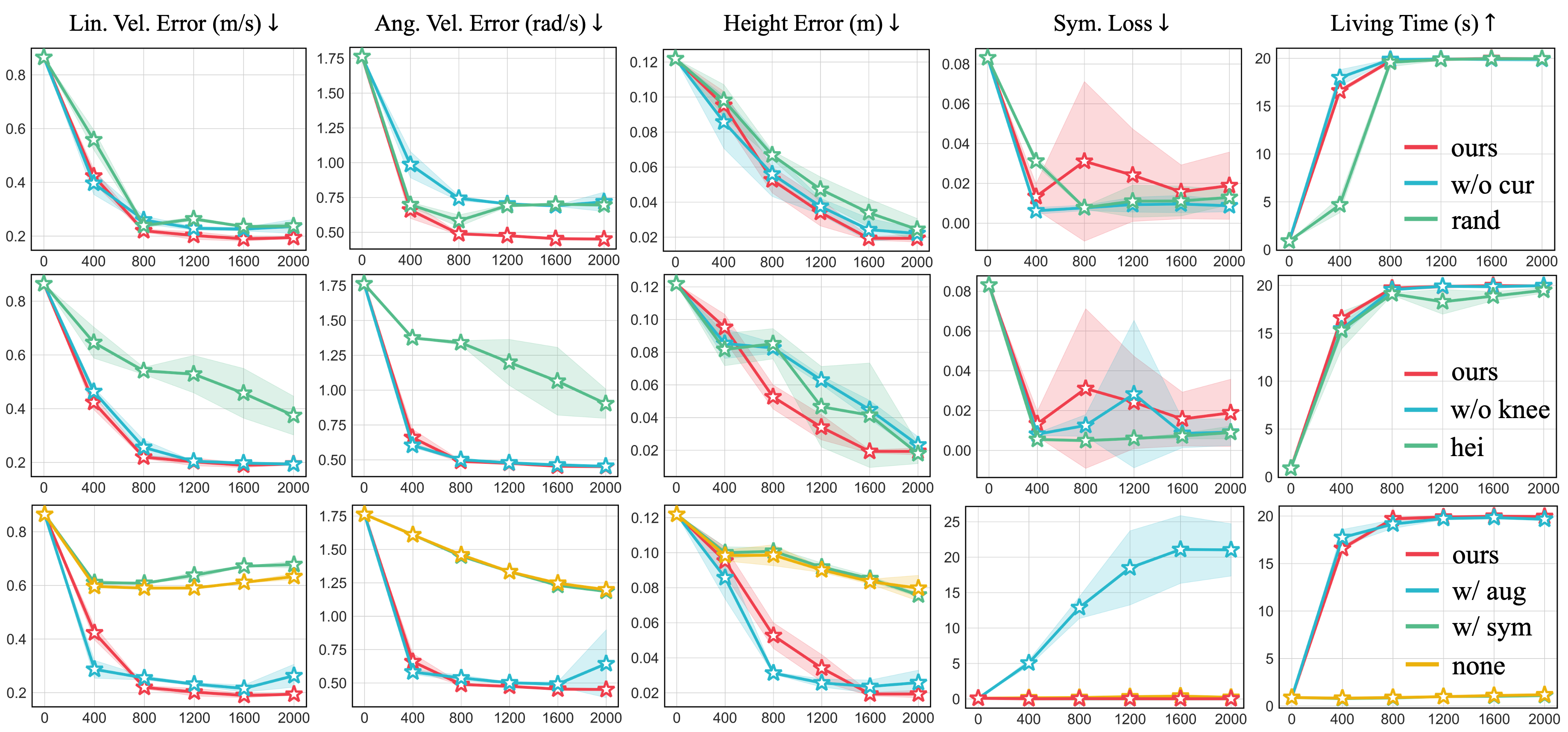

We conduct several ablation experiments to verify the effectiveness of our framework and find that:

- Our upper-body pose curriculum helps robots gradually learn to balance under dynamic upper-body movements, and does so better than training without a curriculum or with other curriculum styles.

- The novel height tracking reward accelerates training for robot squatting.

- Symmetry utilization both accelerates training by more than 10 times and guarantees the symmetry of the trained policy.
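How symmetry is exploited is not detailed here; two common options are mirrored data augmentation and a symmetry loss that penalizes the policy for acting differently on mirrored states. The minimal NumPy sketch below shows both on a hypothetical 4-joint leg layout. The joint ordering, `PERM`, `SIGN`, and the toy policies are illustrative assumptions; a real robot such as the G1 needs the full mirror mapping of its observation and action vectors.

```python
import numpy as np


def mirror(x, perm, sign):
    """Reflect vectors across the robot's sagittal plane.

    `perm` swaps left/right entries; `sign` flips quantities (e.g. roll and yaw
    joints) whose direction reverses under reflection.
    """
    return np.asarray(x, dtype=float)[..., perm] * sign


def augment_with_mirror(obs, actions, obs_perm, obs_sign, act_perm, act_sign):
    """Data augmentation: append a mirrored copy of every transition."""
    obs_m = mirror(obs, obs_perm, obs_sign)
    act_m = mirror(actions, act_perm, act_sign)
    return np.concatenate([obs, obs_m]), np.concatenate([actions, act_m])


def symmetry_loss(policy, obs, obs_perm, obs_sign, act_perm, act_sign):
    """Penalty that is zero when policy(mirror(obs)) == mirror(policy(obs))."""
    mirrored_of_action = mirror(policy(obs), act_perm, act_sign)
    action_of_mirrored = policy(mirror(obs, obs_perm, obs_sign))
    return float(np.mean(np.square(mirrored_of_action - action_of_mirrored)))


# Toy layout: [left_hip_roll, left_knee, right_hip_roll, right_knee].
PERM = np.array([2, 3, 0, 1])            # swap left and right joints
SIGN = np.array([-1.0, 1.0, -1.0, 1.0])  # roll flips sign, knee pitch does not


def symmetric_policy(o):
    return 2.0 * o  # equivariant under the mirror map by construction


def biased_policy(o):
    return o + np.array([1.0, 0.0, 0.0, 0.0])  # always pushes the left hip


obs = np.array([[0.1, 0.5, -0.2, 0.4]])
# Observations double as actions here only to keep the toy small.
aug_obs, aug_act = augment_with_mirror(obs, obs, PERM, SIGN, PERM, SIGN)
```

Augmentation doubles the number of training samples without extra simulation, while the loss pushes the network itself toward mirror-equivariant behavior; the two can also be combined.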